Unsupervised Learning using Gaussian Processes

Motion capture data is very high-dimensional: an individual human pose is typically parameterized with more than 60 variables. However, we suspect that most “human” poses live on a lower-dimensional manifold within that high-dimensional space.

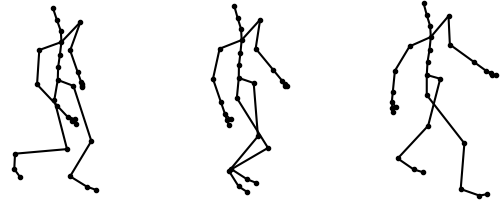

Frames from a motion capture sequence of a subject walking

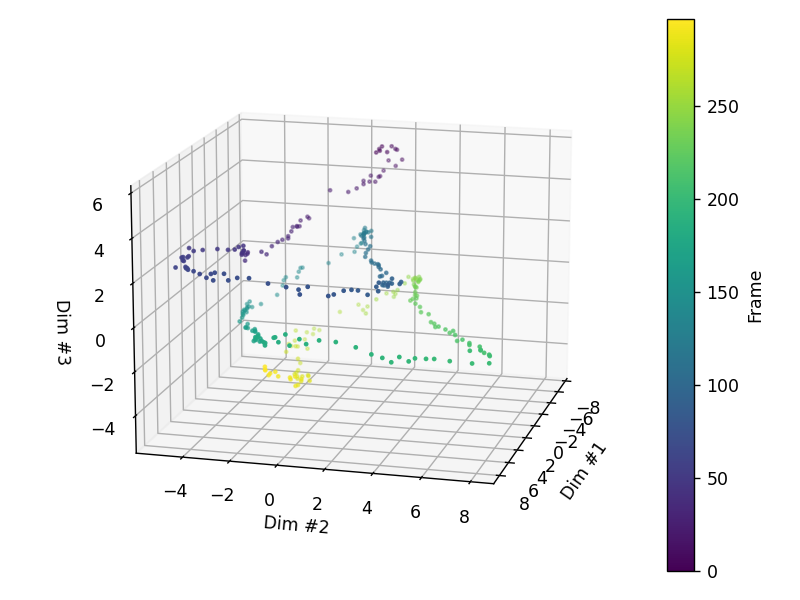

The Gaussian Process Latent Variable Model (GP-LVM) is a probabilistic non-linear dimensionality reduction method that uses Gaussian Processes. We can use this model to find lower-dimensional representations of motion capture sequences, together with a mapping between the latent space and the observed space. For example, we can reduce the motion capture shown above to a trajectory in three dimensions:

Latent representation of a walking motion capture sequence

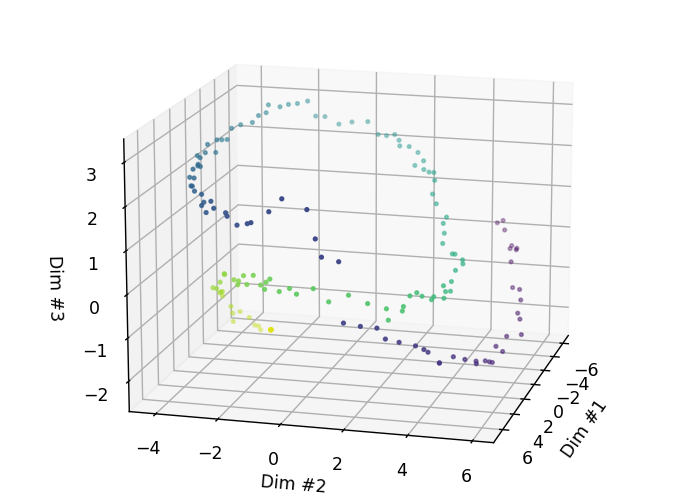

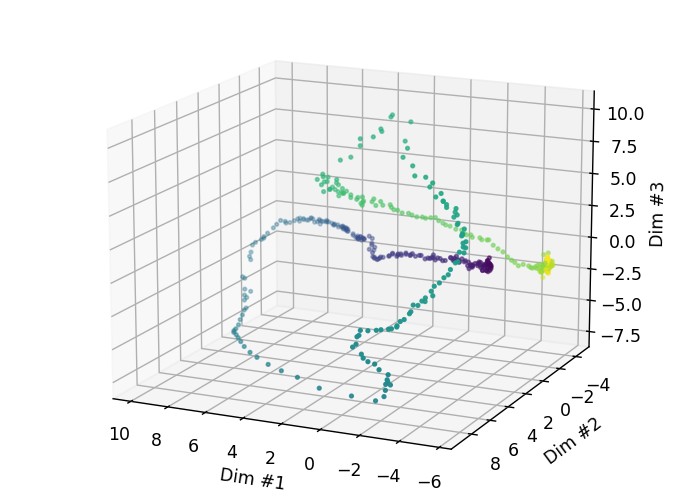
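The GP-LVM treats each latent point as a free parameter and fits all of them, together with the kernel hyperparameters, by maximizing the marginal likelihood of the observed poses under a GP mapping from latent space to pose space. The sketch below is a minimal illustration under assumptions chosen for brevity, not the implementation used for this work: one RBF kernel shared by all output dimensions, a unit Gaussian prior on the latent points, PCA initialization, and finite-difference gradients (practical implementations use analytic gradients). The function names `fit_gplvm` and `neg_log_posterior` and the synthetic 10-D "poses" are hypothetical.

```python
import numpy as np
from scipy.linalg import cho_factor, cho_solve
from scipy.optimize import minimize


def rbf_kernel(X1, X2, variance, lengthscale):
    """Squared-exponential (RBF) kernel matrix between the rows of X1 and X2."""
    sq = (np.sum(X1**2, axis=1)[:, None] + np.sum(X2**2, axis=1)[None, :]
          - 2.0 * X1 @ X2.T)
    return variance * np.exp(-0.5 * np.maximum(sq, 0.0) / lengthscale**2)


def neg_log_posterior(params, Y, latent_dim):
    """GP-LVM negative log marginal likelihood of Y given X, plus an assumed N(0, I) prior on X."""
    N, D = Y.shape
    X = params[: N * latent_dim].reshape(N, latent_dim)
    variance, lengthscale, noise = np.exp(params[N * latent_dim:])
    # Every output dimension shares the covariance K(X, X) + noise * I (plus a small jitter).
    K = rbf_kernel(X, X, variance, lengthscale) + (noise + 1e-6) * np.eye(N)
    chol = cho_factor(K, lower=True)
    alpha = cho_solve(chol, Y)                         # K^{-1} Y
    log_det = 2.0 * np.sum(np.log(np.diag(chol[0])))   # log|K|
    nll = 0.5 * D * log_det + 0.5 * np.sum(Y * alpha) + 0.5 * N * D * np.log(2 * np.pi)
    return nll + 0.5 * np.sum(X**2)


def fit_gplvm(Y, latent_dim=3, max_iter=100):
    """Fit latent points and kernel hyperparameters with L-BFGS-B (finite-difference gradients)."""
    Y = Y - Y.mean(axis=0)                     # the GP prior is zero-mean, so center the data
    N = Y.shape[0]
    U, S, _ = np.linalg.svd(Y, full_matrices=False)
    X0 = U[:, :latent_dim] * S[:latent_dim]    # PCA initialization of the latent points
    X0 = X0 / X0.std()
    params0 = np.concatenate([X0.ravel(), np.log([1.0, 1.0, 0.1])])
    # Latent coordinates are unbounded; log-hyperparameters are boxed for numerical stability.
    bounds = [(None, None)] * (N * latent_dim) + [(-5.0, 5.0)] * 3
    res = minimize(neg_log_posterior, params0, args=(Y, latent_dim),
                   method="L-BFGS-B", bounds=bounds, options={"maxiter": max_iter})
    variance, lengthscale, noise = np.exp(res.x[N * latent_dim:])
    return {
        "X": res.x[: N * latent_dim].reshape(N, latent_dim),
        "variance": variance, "lengthscale": lengthscale, "noise": noise,
        "nll_init": neg_log_posterior(params0, Y, latent_dim),
        "nll": res.fun,
    }


# Synthetic stand-in for a periodic motion: a 1-D phase embedded non-linearly in 10-D.
rng = np.random.default_rng(0)
t = np.linspace(0, 2 * np.pi, 40, endpoint=False)
features = np.column_stack([np.sin(t), np.cos(t), np.sin(2 * t), np.cos(2 * t)])
Y = features @ rng.normal(size=(4, 10)) + 0.05 * rng.normal(size=(40, 10))
result = fit_gplvm(Y, latent_dim=3)
print(result["X"].shape)  # (40, 3)
```

Initializing from PCA matters because the objective is non-convex in the latent points; a linear projection gives the optimizer a sensible starting configuration that it then bends non-linearly.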

For many human activities, a three-dimensional latent space is sufficient:

Running

Jumping

Finally, we can use the learned mapping between the latent space and the observed space to create novel motion sequences. We create a simple trajectory in the latent space and map it back to the observed space:

Novel latent trajectory and the corresponding motion in the observed space
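Mapping a latent trajectory back to pose space can be done with the GP predictive mean, y(x*) = k(x*, X) (K + noise·I)^{-1} (Y - mean) + mean. The sketch below assumes an RBF kernel whose hyperparameters were already fitted; the closed-loop latent points, the synthetic 10-D "poses", the hyperparameter values, and the function name `map_to_observed` are hypothetical stand-ins, not values from this project.

```python
import numpy as np
from scipy.linalg import cho_factor, cho_solve


def rbf_kernel(X1, X2, variance, lengthscale):
    """Squared-exponential (RBF) kernel matrix between the rows of X1 and X2."""
    sq = (np.sum(X1**2, axis=1)[:, None] + np.sum(X2**2, axis=1)[None, :]
          - 2.0 * X1 @ X2.T)
    return variance * np.exp(-0.5 * np.maximum(sq, 0.0) / lengthscale**2)


def map_to_observed(X_new, X, Y, variance, lengthscale, noise):
    """GP posterior mean of the observed poses at new latent points X_new."""
    Y_mean = Y.mean(axis=0)
    K = rbf_kernel(X, X, variance, lengthscale) + noise * np.eye(len(X))
    alpha = cho_solve(cho_factor(K, lower=True), Y - Y_mean)  # K^{-1} (Y - mean)
    K_new = rbf_kernel(X_new, X, variance, lengthscale)       # cross-covariances k(x*, X)
    return K_new @ alpha + Y_mean


# Hypothetical "learned" latent points: a closed loop, as a periodic motion might produce.
rng = np.random.default_rng(1)
t = np.linspace(0, 2 * np.pi, 30, endpoint=False)
X = np.column_stack([np.cos(t), np.sin(t), np.zeros_like(t)])
features = np.column_stack([np.sin(t), np.cos(t), np.sin(2 * t), np.cos(2 * t)])
Y = features @ rng.normal(size=(4, 10))  # stand-in for 10-D pose vectors

# A simple novel latent trajectory: a smaller loop lifted slightly off the training plane.
s = np.linspace(0, 2 * np.pi, 50)
X_new = np.column_stack([0.8 * np.cos(s), 0.8 * np.sin(s), 0.1 * np.ones_like(s)])
Y_new = map_to_observed(X_new, X, Y, variance=1.0, lengthscale=0.5, noise=1e-4)
print(Y_new.shape)  # (50, 10)
```

Because the predictive mean is a smooth interpolation of the training poses, latent trajectories that stay near the training data produce plausible poses, while trajectories far from it drift back toward the mean pose.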

This work was done during my master’s degree at the University of Cambridge.